Scaling Kubernetes Workloads: Proactive Autoscaling using KEDA

In modern cloud architectures, applications are broken down into independent building blocks known as microservices. Microservices allow teams to be more agile and to deploy faster.

What is Event-Driven Architecture?

Event-driven architecture is a software design pattern in which components are built to react to events. One component produces an event and another reacts to it, as opposed to a request-driven architecture, where one component sends a request directly to another and waits for the response.

In an event-driven architecture, the producer emits an event and the consumer reacts to it. This approach limits coupling between components, because neither needs to be aware of the other. It also allows the same event to be distributed to many subscribers.

Event-driven apps can be created in any programming language because event-driven design is a programming approach, not a language. The minimal coupling it enables makes it a good option for modern, distributed application architectures.
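To make the producer and consumer roles concrete, here is a minimal in-memory sketch in Python. The `EventBus` class, the `order.created` event name, and the payload shape are all hypothetical and chosen for illustration; a real system would typically put a broker such as Kafka between the two sides.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class EventBus:
    """Minimal in-memory publish/subscribe hub (illustrative only)."""

    def __init__(self) -> None:
        # Maps an event name to the handlers subscribed to it.
        self._handlers: DefaultDict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_name: str, handler: Callable[[dict], None]) -> None:
        """Register a consumer for one kind of event."""
        self._handlers[event_name].append(handler)

    def publish(self, event_name: str, payload: dict) -> int:
        """Deliver the payload to every subscriber and return how many were notified."""
        handlers = list(self._handlers.get(event_name, []))
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
shipped: list = []
emailed: list = []

# Two independent consumers; neither knows about the other or about the producer.
bus.subscribe("order.created", lambda event: shipped.append(event["order_id"]))
bus.subscribe("order.created", lambda event: emailed.append(event["order_id"]))

# The producer only announces that something happened.
notified = bus.publish("order.created", {"order_id": 42})
print(notified, shipped, emailed)
```

The producer never calls the shipping or email code directly, so a third subscriber can be added without touching the producer at all.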

What is KEDA?

KEDA (Kubernetes Event-Driven Autoscaling) is a single-purpose, lightweight, open-source component that can be added to any Kubernetes cluster. It is built to activate and scale Kubernetes Deployments based on events from various sources. KEDA works alongside standard Kubernetes components such as the Horizontal Pod Autoscaler (HPA) and extends their functionality, without overwriting or duplicating it, by allowing workloads to scale according to the number of events in the event source.

It was created by Microsoft and Red Hat in 2019 and donated to the CNCF in March 2020. It installs as a Kubernetes Operator that adds or removes workload replicas in response to events emitted by various external sources, such as Apache Kafka data pipelines and monitoring utilities like AWS CloudWatch. KEDA connects to these external systems through a set of components called Scalers.
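In practice, a workload is usually wired to a Scaler by creating a KEDA `ScaledObject` resource. The sketch below builds one for the Kafka scaler as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The resource name, Deployment name, broker address, topic, and thresholds are hypothetical, and the field names should be checked against the KEDA documentation for the version you run.

```python
import json


def build_kafka_scaled_object(name, deployment, bootstrap_servers, topic,
                              consumer_group, lag_threshold, max_replicas):
    """Return a KEDA ScaledObject manifest (as a dict) that uses the Kafka scaler."""
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": name},
        "spec": {
            # The Deployment that KEDA should scale.
            "scaleTargetRef": {"name": deployment},
            # Allow scaling all the way down to zero when there is no work.
            "minReplicaCount": 0,
            "maxReplicaCount": max_replicas,
            "triggers": [
                {
                    "type": "kafka",
                    # Scaler metadata values are passed as strings.
                    "metadata": {
                        "bootstrapServers": bootstrap_servers,
                        "consumerGroup": consumer_group,
                        "topic": topic,
                        "lagThreshold": str(lag_threshold),
                    },
                }
            ],
        },
    }


# All values below are made up for the example.
manifest = build_kafka_scaled_object(
    name="orders-consumer-scaler",
    deployment="orders-consumer",
    bootstrap_servers="kafka.example.svc:9092",
    topic="orders",
    consumer_group="orders-group",
    lag_threshold=50,
    max_replicas=20,
)
print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it to a cluster with KEDA installed would, under these assumptions, ask KEDA to scale the `orders-consumer` Deployment based on consumer lag on the `orders` topic.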

KEDA currently supports more than 30 Scalers, allowing developers and platform engineers to move beyond the native Kubernetes Horizontal Pod Autoscaler (HPA) and Vertical Pod Autoscaler (VPA) utilities, which by default scale according to the CPU and memory usage of the workload.

KEDA provides a FaaS-like model of event-aware scaling, where deployments can dynamically scale to and from zero based on demand. KEDA also brings more event sources to Kubernetes.
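The idea of scaling to and from zero on demand can be illustrated with a small calculation. This is a simplified sketch of event-based scaling, not KEDA's actual code: it assumes a single queue-length metric and a hypothetical per-replica target, and it ignores polling intervals, cooldown periods, and stabilization windows.

```python
import math


def desired_replicas(queue_length, target_per_replica, min_replicas=0, max_replicas=10):
    """Estimate how many replicas are needed to work through a backlog.

    queue_length: number of pending events in the source (for example, messages in a queue).
    target_per_replica: how many pending events one replica is expected to handle.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if queue_length <= 0:
        # No pending work: fall back to the floor, which may be zero.
        return min_replicas
    wanted = math.ceil(queue_length / target_per_replica)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(wanted, max_replicas))


for backlog in (0, 1, 250, 1_000_000):
    print(backlog, "->", desired_replicas(backlog, target_per_replica=100))
```

With an empty queue the workload can sit at zero replicas, a single message is enough to bring one replica up, and a very large backlog is capped at the configured maximum.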

As Jeff Hollan, the Principal Product Manager for Azure Functions and serverless technology on Kubernetes at Microsoft, put it: “By default Kubernetes can really only do resource-based scaling looking at CPU and memory and in many ways, that’s like treating the symptom, and not the cause. Yes, a bunch of messages will eventually cause the CPU usage to rise but what I really want to be able to scale on is if there’s a million messages in the queue that need to be processed.”

KEDA Architecture

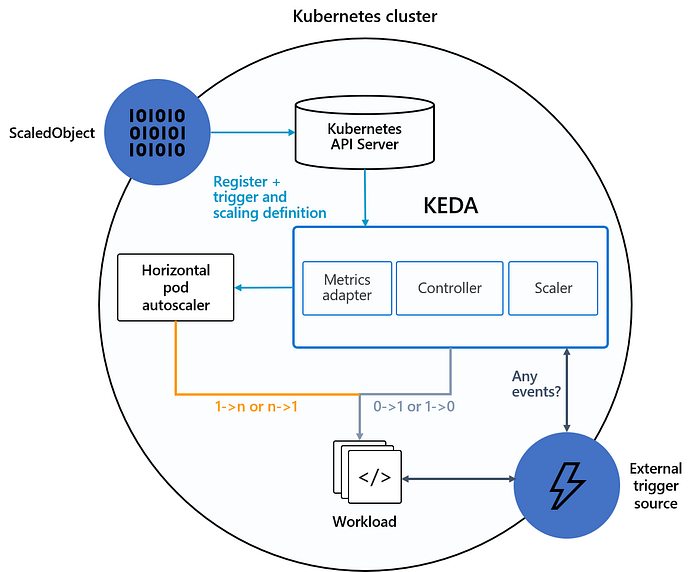

The diagram below shows how KEDA works in conjunction with the Kubernetes Horizontal Pod Autoscaler, external event sources, and Kubernetes’ etcd data store:

KEDA Features:

- Event-driven architecture

- Autoscaling Made Simple

- Built-in Scalers (30+)

- Support for Multiple Workload Types

- Vendor-Agnostic

- Azure Functions Support
